WINTER SCHOOL

"Generative AI Techniques for Signal and Information Processing Applications"

October 31, 2023

Chairs:

Prof. Mu-Yen Chen, National Cheng Kung University, Taiwan

Prof. Mingyi He, VP, APSIPA

With its low-power characteristics, the ADI Max78000 is perfectly suited for edge devices where energy efficiency is crucial for prolonged operation without frequent recharging or replacing batteries. By carefully optimizing power consumption during AI model inference and leveraging the CNN accelerator, the Max78000 can achieve remarkable AI performance while conserving energy, making it an excellent choice for battery-powered and energy-sensitive applications.

In conclusion, by harnessing the strengths of CNN acceleration and low-power characteristics, the integration of ADI Max78000 with Generative AI unlocks new possibilities for innovative and energy-efficient edge AI applications. Whether it's enhancing image synthesis, generating realistic content, or enabling interactive voice assistants, this powerful combination opens up endless opportunities to create intelligent and sustainable AI solutions for the future.

Many classical speech processing problems, such as enhancement, source separation and dereverberation, can be formulated as finding mapping functions that transform noisy input spectra into clean output spectra. As a result, DNN-transformed speech usually exhibits good quality and clear intelligibility under adverse acoustic conditions. Finally, our proposed deep regression framework was also tested on recent challenging tasks in the CHiME-2, CHiME-4, CHiME-5, CHiME-6, REVERB and DIHARD evaluations. Based on the top quality achieved in microphone-array-based enhancement, separation and dereverberation, our teams scored the lowest error rates in almost all of the above-mentioned open evaluation scenarios.

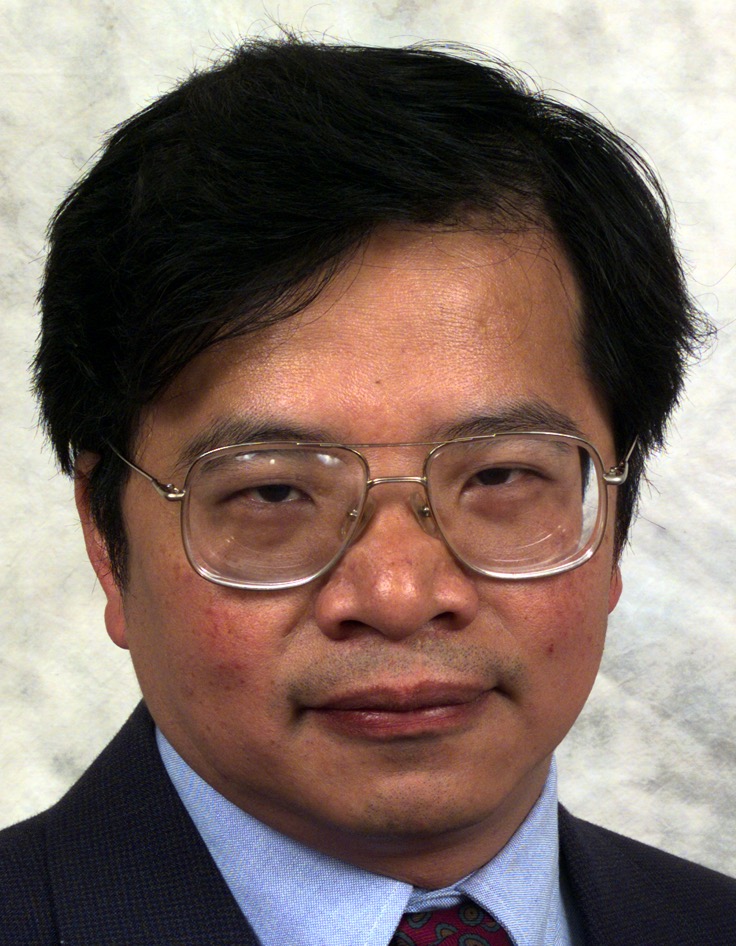

Chin-Hui Lee

"Deep Regression for Spectral Mapping with Applications to Speech Enhancement, Source Separation and Speech Dereverberation"

Chin-Hui Lee is a professor at the School of Electrical and Computer Engineering, Georgia Institute of Technology. Before joining academia in 2001, he had accumulated 20 years of industrial experience, ending at Bell Laboratories, Murray Hill, as the Director of the Dialogue Systems Research Department. Dr. Lee is a Fellow of the IEEE and a Fellow of ISCA. He has published 30 patents and about 600 papers, with more than 55,000 citations and an h-index of about 80 on Google Scholar. He has received numerous awards, including the Bell Labs President's Gold Award for speech recognition products in 1998. He won the SPS's 2006 Technical Achievement Award for “Exceptional Contributions to the Field of Automatic Speech Recognition”. In 2012 he gave an ICASSP plenary talk on the future of automatic speech recognition. In the same year he was awarded the ISCA Medal in Scientific Achievement for “pioneering and seminal contributions to the principles and practice of automatic speech and speaker recognition”. His two pioneering papers on deep regression have accumulated over 2,000 citations in recent years and won a Best Paper Award from the IEEE Signal Processing Society in 2019.

Mario Koeppen

"Metaverse as AI Embodiment: Techniques, Impact, and Research Opportunities"

Mario Köppen was born in 1964. He studied physics at the Humboldt-University of Berlin and received his master's degree in solid state physics in 1991. Afterwards, he worked as a scientific assistant at the Central Institute for Cybernetics and Information Processing in Berlin and changed his main research interests to image processing and neural networks. From 1992 to 2006, he worked at the Fraunhofer Institute for Production Systems and Design Technology, where he continued his work on industrial applications of image processing, pattern recognition, and soft computing, especially evolutionary computation. During this period, he received his doctoral degree with honors from the Technical University Berlin for his thesis "Development of an intelligent image processing system by using soft computing". He has published more than 150 peer-reviewed papers in conference proceedings, journals and books, and has been active in the organization of various conferences as chair or member of the program committee, including the WSC on-line conference series on Soft Computing in Industrial Applications and the HIS conference series on Hybrid Intelligent Systems. He is a founding member of the World Federation of Soft Computing and an Editor of the Applied Soft Computing journal. In 2006, he became a JSPS fellow at the Kyushu Institute of Technology in Japan, in 2008 Professor at the Network Design and Research Center (NDRC), and in 2013 Professor at the Graduate School of Creative Informatics of the Kyushu Institute of Technology, where he now conducts research in the fields of multi-objective and relational optimization, digital convergence and multimodal content management.

Chunghui Kuo

"Deep Learning in Image Processing and Computer Vision"

Chunghui Kuo is the Editor-in-Chief of the Journal of Imaging Science and Technology and the founder of Raycers Technology, which focuses on autonomous printing and intelligent environment sensing.

He received his PhD in Electrical and Computer Engineering from the University of Minnesota and joined Eastman Kodak Company in 2001. He was a senior scientist, a Distinguished Inventor, and served as an IP coordinator at the Eastman Kodak Company. He holds 52 US patents, and his patented automatic white-blending workflow received a 2017 Canadian Printing Award. He served as an industrial standardization committee member at the International Organization for Standardization (ISO) from 2005 to 2008.

He is a senior member of the IEEE Signal Processing Society and his research interests are in image processing, computer vision, blind signal separation and classification, and artificial intelligence applied in signal/image processing.

Hung-Yi Lee

"How far are we from a speech version of ChatGPT?"

Hung-yi Lee (李宏毅) is an associate professor in the Department of Electrical Engineering of National Taiwan University (NTU), with a joint appointment at the university's Department of Computer Science & Information Engineering. His recent research focuses on developing technology that can reduce the requirement of annotated data for speech processing (including voice conversion and speech recognition) and natural language processing (including abstractive summarization and question answering). He won the Salesforce Research Deep Learning Grant in 2019, the AWS ML Research Award in 2020, the Outstanding Young Engineer Award from The Chinese Institute of Electrical Engineering in 2018, the Young Scholar Innovation Award from the Foundation for the Advancement of Outstanding Scholarship in 2019, the Ta-You Wu Memorial Award from the Ministry of Science and Technology of Taiwan in 2019, and The 59th Ten Outstanding Young Person Award in Science and Technology Research & Development of Taiwan. He runs a YouTube channel teaching deep learning in Mandarin with about 100k subscribers.

Neal Huang

"Energy-Efficient Generative AI Future: Low-Power Applications of ADI Max78000"

As part of Analog Devices Inc. (ADI), a world-renowned semiconductor leader, I am thrilled to be a member of the dynamic team responsible for AI-related product research and development. Drawing on more than 20 years of experience in the semiconductor industry, I bring a wealth of expertise from engineering and FAE roles.

ADI is renowned for its world-class analog, mixed-signal, and digital signal processing (DSP) technologies, which enable the development of a wide range of applications across various sectors. From healthcare and automotive to industrial, communications, and consumer electronics, ADI's products play a crucial role in shaping the future of these industries.

At ADI, we are committed to pushing the boundaries of AI technology and its applications across various industries. Leveraging my extensive background in programming software, firmware, and hardware circuit design, I am excited to contribute to the creation of cutting-edge AI solutions that cater to our customers' specific needs and drive technological advancements in the semiconductor field.